agility-docs

Autoloader

ℹ️ Autoloader is currently using StreamSets to ingest data. This will be replaced by a custom solution in the future.

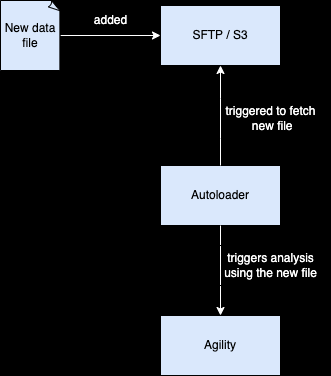

Autoloader Summary

Autoloader is a tool used for automated data ingestion, which provides an alternative to manually uploading data through the UI.

This page provides example commands for creating a pipeline in StreamSets. The parameters include the SFTP or S3 server URL, credentials, file patterns, and the pipeline ID.

Each command is intended to be run inline using kubectl exec in a Kubernetes deployment.

Prerequisites

- kubectl

- AGILITY application installed

High Level Diagram

Create a new source pipeline

The Autoloader supports two types of sources: SFTP and S3. The following sections describe how to create a new pipeline for each type of source.

Common parameters

| Environment Variable | Description | Default Value |
|---|---|---|
| AGILITY_NS | Namespace where AGILITY is deployed | agility |
| STREAMSETS_SECRET_NAME | Name of the secret holding the StreamSets admin user password | agility-autoloader-secrets |
| STREAMSETS_LOGIN | User to access StreamSets | admin |
| STREAMSETS_PASSWORD | If provided, this password is used to create the pipeline | If not provided, the password is read from STREAMSETS_SECRET_NAME |
| STREAMSETS_URL | URL to access the StreamSets API | http://localhost:18630 |
| AGILITY_BACKEND_URL | Internal backend server URL. Leave the default value. | http://agility-backend |
| SOURCE_TYPE | Source type that AGILITY pulls files from (values: SFTP, S3) | SFTP |
| PIPELINE_ID | ID of the pipeline. Should contain only alphanumeric, dash, or underscore characters. MANDATORY | <empty> |
| AGILITY_SERVICE_KEYS | Comma-separated list of services/models needed for the given Autoloader instance. Note: this property is required when auto service detection is not enabled; when auto service detection is enabled, an empty value results in auto service selection | <empty> |
| AGILITY_USERNAME | User under which predictions will be processed and made visible. Other users will not see these predictions. | <empty> |

* Service Keys

ℹ️ Values for these keys might be different depending on customizations. Generic values can be used as default unless specified by B-Yond customer support.

| service_key | service_name | description |
|---|---|---|
| 4g_5g_nsa_connectivity | 4G & 5G NSA Connectivity | Supported Protocols: S1AP, GTPv2, DIAMETER, HTTP2, PFCP |
| 5g_sa_connectivity | 5G SA Connectivity | Captures AMF, SMF, UDM and PCF |
| 5g_sa_data | 5G SA Data | Supported Protocols: TCP, ICMPv4, ICMPv6, DNS, HTTP2, PFCP, NGAP, GTPV2 |
| 5g_sa_mobility | 5G SA Mobility | Supported Protocols: NGAP, NAS-5GS, HTTP2, PFCP, GTPV2, S1AP, SIP, DIAMETER |
| volte | Voice & Video over LTE | Captures MME, S-GW, P-GW, OCS, HSS, S-CSCF, TAS, IBCF, MGCF |
| vonr | Voice & Video over New Radio | Captures GNodeB, AMF, SMF, UPF, UDM, PCF, I/S, TAS |
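Since AGILITY_SERVICE_KEYS takes a comma-separated list, a small helper can build the value and catch misspelled keys before the pipeline is created. The following is a minimal sketch, not part of the Autoloader scripts: `join_service_keys` and `KNOWN_KEYS` are hypothetical names, and the key list assumes the generic values from the table above (customized deployments may use different keys).

```shell
#!/bin/sh
# Generic service keys from the table above (assumption: no customizations).
KNOWN_KEYS="4g_5g_nsa_connectivity 5g_sa_connectivity 5g_sa_data 5g_sa_mobility volte vonr"

# join_service_keys KEY...
# Hypothetical helper: prints the given keys as one comma-separated value,
# or returns 1 if a key is not listed in KNOWN_KEYS.
join_service_keys() {
  result=""
  for key in "$@"; do
    case " $KNOWN_KEYS " in
      *" $key "*) ;;
      *) echo "unknown service key: $key" >&2; return 1 ;;
    esac
    # Append with a comma only when result is already non-empty.
    result="${result:+$result,}$key"
  done
  printf '%s\n' "$result"
}

AGILITY_SERVICE_KEYS=$(join_service_keys volte vonr)
echo "AGILITY_SERVICE_KEYS=$AGILITY_SERVICE_KEYS"
```

The resulting value can then be pasted into the AGILITY_SERVICE_KEYS assignment of a create command.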

To create an SFTP pipeline in StreamSets, run the create command as a single inline command, setting the parameters described below.

SFTP Pipeline parameters

| Environment Variable | Description | Default Value |
|---|---|---|
| SOURCE_TYPE | Set the value to SFTP | always set SFTP |
| SFTP_RESOURCE_URL | Source of files, includes SFTP server and path | <empty> |
| SFTP_USERNAME | Source SFTP username | <empty> |
| SFTP_PASSWORD | Source SFTP password. If SFTP_PRIVATE_SSH_KEY or SFTP_PRIVATE_SSH_KEY_FILE is provided, this parameter is discarded | <empty> |
| SFTP_PRIVATE_SSH_KEY | Private SSH key for SFTP_USERNAME. When provided, private key authentication is used instead of password authentication | <empty> |
| SFTP_PRIVATE_SSH_KEY_FILE | File containing the private SSH key for SFTP_USERNAME. When provided, private key authentication is used instead of password authentication | <empty> |
| SFTP_FILE_PATTERN | File pattern to download files | *.pcap |
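The precedence between the credential variables in the table above can be sketched as a small check. This is only an illustration of the documented rule (a private key, inline or from a file, takes priority over SFTP_PASSWORD); `sftp_auth_mode` is a hypothetical helper and not how create_replace.sh is known to be implemented.

```shell
#!/bin/sh
# sftp_auth_mode
# Hypothetical helper: prints the authentication mode that the current
# SFTP_* variables select, following the rule documented in the table.
sftp_auth_mode() {
  if [ -n "${SFTP_PRIVATE_SSH_KEY:-}" ] || [ -n "${SFTP_PRIVATE_SSH_KEY_FILE:-}" ]; then
    # A key (inline or from a file) wins; SFTP_PASSWORD is discarded.
    echo "private-key"
  elif [ -n "${SFTP_PASSWORD:-}" ]; then
    echo "password"
  else
    echo "none"
    return 1
  fi
}

# Run in a subshell so the sample values do not leak into the caller.
# The key file path is a made-up placeholder.
(
  SFTP_PASSWORD=my-password
  SFTP_PRIVATE_SSH_KEY_FILE=/path/to/sftp_key
  sftp_auth_mode
)
```

With both a password and a key file set, the sketch reports private-key mode, matching the note that the password is discarded.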

| Environment Variable | Description |
|---|---|
| SOURCE_TYPE | Set the value to SFTP |
| STREAMSETS_PASSWORD | Internal password for StreamSets. Leave the value as specified in the examples. |
| SFTP_RESOURCE_URL | Full URL of the SFTP server where files to ingest are located. |
| SFTP_USERNAME | Username to connect to the SFTP server. |
| SFTP_PRIVATE_SSH_KEY | Private key to connect to the SFTP server. |
| SFTP_PASSWORD | Password to connect to the SFTP server. |
| SFTP_FILE_PATTERN | File pattern to filter out files that are not pcap files. Use the pattern *.(pcap\|pcapng\|cap\|zip). |
| PIPELINE_ID | ID of the SFTP pipeline. Should contain alphanumeric, dash, or underscore characters. |
| AGILITY_BACKEND_URL | Internal backend server URL. Leave the default value. |
| AGILITY_USERNAME | User under which predictions will be processed and made visible. Other users will not see these predictions. |
| AGILITY_SERVICE_KEYS * | Comma-separated list of services/models needed for given auto-loader instance. Note: this is required property in case auto service detection is not enabled; if auto service detection is enabled, empty value for service keys will result in auto service selection |
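Because PIPELINE_ID is mandatory and restricted to alphanumeric, dash, and underscore characters, it can be worth checking the value locally before running the create command. A minimal sketch follows; `is_valid_pipeline_id` is a hypothetical helper, and whether create_replace.sh performs its own validation is not stated here.

```shell
#!/bin/sh
# is_valid_pipeline_id ID
# Hypothetical helper: succeeds only if ID is non-empty and contains
# nothing but letters, digits, dashes, and underscores.
is_valid_pipeline_id() {
  case "$1" in
    "") return 1 ;;                    # mandatory: empty is rejected
    *[!A-Za-z0-9_-]*) return 1 ;;      # any other character is rejected
    *) return 0 ;;
  esac
}

PIPELINE_ID=test-pipeline
if is_valid_pipeline_id "$PIPELINE_ID"; then
  echo "PIPELINE_ID looks valid: $PIPELINE_ID"
else
  echo "invalid PIPELINE_ID: $PIPELINE_ID" >&2
fi
```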

SFTP Examples

This example connects to an SFTP server using a username and a private key:

    kubectl exec -n agility agility-autoloader-0 -- bash -c "cd /byond && \
    AGILITY_NS=agility \
    STREAMSETS_PASSWORD=$(kubectl get secrets -n agility agility-autoloader-secrets -o jsonpath="{.data.admin\.password}" | base64 -d) \
    SOURCE_TYPE=SFTP \
    SFTP_RESOURCE_URL=sftp://sftp.b-yond.com:22/private/upload/sample \
    SFTP_USERNAME=my-username \
    SFTP_PRIVATE_SSH_KEY='$(cat ../sftp_key)' \
    SFTP_FILE_PATTERN='*.(pcap|pcapng|cap|zip)' \
    PIPELINE_ID=test-pipeline \
    AGILITY_BACKEND_URL=http://agility-backend \
    AGILITY_USERNAME='agility-admin@b-yond.com' \
    ./create_replace.sh"

This second example connects to an SFTP server using a username and a password:

    kubectl exec -n agility agility-autoloader-0 -- bash -c "cd /byond && \
    AGILITY_NS=agility \
    STREAMSETS_PASSWORD=$(kubectl get secrets -n agility agility-autoloader-secrets -o jsonpath="{.data.admin\.password}" | base64 -d) \
    SOURCE_TYPE=SFTP \
    SFTP_RESOURCE_URL=sftp://sftp.b-yond.com:22/private/upload/sample \
    SFTP_USERNAME=my-username \
    SFTP_PASSWORD=my-password \
    AGILITY_SERVICE_KEYS=comma-separated-service-list \
    SFTP_FILE_PATTERN='*.(pcap|pcapng|cap|zip)' \
    PIPELINE_ID=test-pipeline \
    AGILITY_BACKEND_URL=http://agility-backend \
    AGILITY_USERNAME='agility-admin@b-yond.com' \
    ./create_replace.sh"

Adjust the values to match your environment, especially SFTP_RESOURCE_URL, SFTP_USERNAME, SFTP_PRIVATE_SSH_KEY (or SFTP_PASSWORD), and AGILITY_USERNAME.
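The STREAMSETS_PASSWORD line in the examples pipes the secret through base64 -d because Kubernetes stores Secret values base64-encoded under .data. The sketch below shows only that decoding step on a made-up sample string, so it runs without a cluster; `decode_secret` is a hypothetical helper and the sample value is not a real password.

```shell
#!/bin/sh
# decode_secret
# Hypothetical helper: reads a base64-encoded value on stdin and prints
# the decoded text, as the examples do with the kubectl jsonpath output.
decode_secret() {
  base64 -d
}

# Made-up sample standing in for the output of:
#   kubectl get secrets -n agility agility-autoloader-secrets -o jsonpath="{.data.admin\.password}"
encoded='czNjcjN0'
STREAMSETS_PASSWORD=$(printf '%s' "$encoded" | decode_secret)

# An empty result usually means the secret name, key, or namespace is wrong.
if [ -z "$STREAMSETS_PASSWORD" ]; then
  echo "decoded password is empty; check the secret name and namespace" >&2
else
  echo "password decoded (${#STREAMSETS_PASSWORD} characters)"
fi
```

Checking for an empty value before calling create_replace.sh avoids creating a pipeline with a blank StreamSets password when the lookup silently fails.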

Create a new S3 source pipeline in cluster

To create a new S3 source pipeline, ensure you have the necessary AWS S3 configuration details. The script will configure and deploy a pipeline to pull data from a specified S3 bucket.

S3 Pipeline parameters

| Parameter | Description | Default Value |
|---|---|---|
| SOURCE_TYPE | Set the value to S3 | always set S3 |
| S3_ENDPOINT_URL | URL of the S3 service endpoint | <empty> |
| S3_ACCESS_KEY_ID | AWS access key ID for S3 authentication | <empty> |
| S3_SECRET_ACCESS_KEY | AWS secret access key for S3 authentication | <empty> |
| S3_BUCKET | Name of the S3 bucket from which to pull data | <empty> |
| S3_FOLDER_PREFIX | Prefix of the folder in the S3 bucket (optional). Examples: US/East/MD/ , US/ | <empty> |
| S3_FILE_PATTERN | Pattern to match files in the S3 bucket. Examples: * , /.pcap , **/prod/*.pcap , US//prod/*.pcap | * |
| S3_READ_ORDER | Order to read files (TIMESTAMP or LEXICOGRAPHICAL) | <empty> |
| S3_PIPELINE_TEMPLATE_FILE | JSON template with the pipeline definition. Do not change this unless you know what you are doing | S3_template.json |
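S3_READ_ORDER accepts only two documented values, so a typo is easy to catch before the pipeline is created. The following is a minimal sketch with a hypothetical helper, `is_valid_read_order`; how the script treats an empty value is not documented here, so the sketch only checks values that are actually set.

```shell
#!/bin/sh
# is_valid_read_order VALUE
# Hypothetical helper: succeeds only for the two documented values.
is_valid_read_order() {
  case "$1" in
    TIMESTAMP|LEXICOGRAPHICAL) return 0 ;;
    *) return 1 ;;
  esac
}

S3_READ_ORDER=TIMESTAMP
# Only validate when a value is set; an empty value is left to the script.
if [ -n "$S3_READ_ORDER" ] && ! is_valid_read_order "$S3_READ_ORDER"; then
  echo "S3_READ_ORDER must be TIMESTAMP or LEXICOGRAPHICAL, got: $S3_READ_ORDER" >&2
else
  echo "S3_READ_ORDER=$S3_READ_ORDER"
fi
```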

S3 Examples

    kubectl exec -n cv agility-autoloader-0 -- bash -c "cd /byond && \
    AGILITY_NS=cv \
    STREAMSETS_PASSWORD=$(kubectl get secrets -n cv agility-autoloader-secrets -o jsonpath="{.data.admin\.password}" | base64 -d) \
    SOURCE_TYPE=S3 \
    S3_ENDPOINT_URL=https://s3.us-east-2.amazonaws.com \
    S3_ACCESS_KEY_ID=your-access-key-id \
    S3_SECRET_ACCESS_KEY=your-secret-access-key \
    S3_BUCKET=my-bucket \
    S3_FOLDER_PREFIX=US/ \
    S3_FILE_PATTERN=**/prod/*.pcap \
    S3_READ_ORDER=TIMESTAMP \
    PIPELINE_ID=test-pipeline \
    AGILITY_BACKEND_URL=http://agility-backend.cv \
    AGILITY_USERNAME=someone@b-yond.com \
    ./create_replace.sh"

Adjust the values to match your environment, especially S3_ENDPOINT_URL, S3_ACCESS_KEY_ID, S3_SECRET_ACCESS_KEY, S3_BUCKET, S3_FOLDER_PREFIX, S3_FILE_PATTERN, S3_READ_ORDER, and AGILITY_USERNAME.

To start the pipeline, port-forward to the StreamSets UI and click the start button. Currently there is no shell command to start the pipeline.

- After creating the pipeline, port-forward to agility-autoloader-0 with this command:

kubectl port-forward -n agility agility-autoloader-0 18630:18630

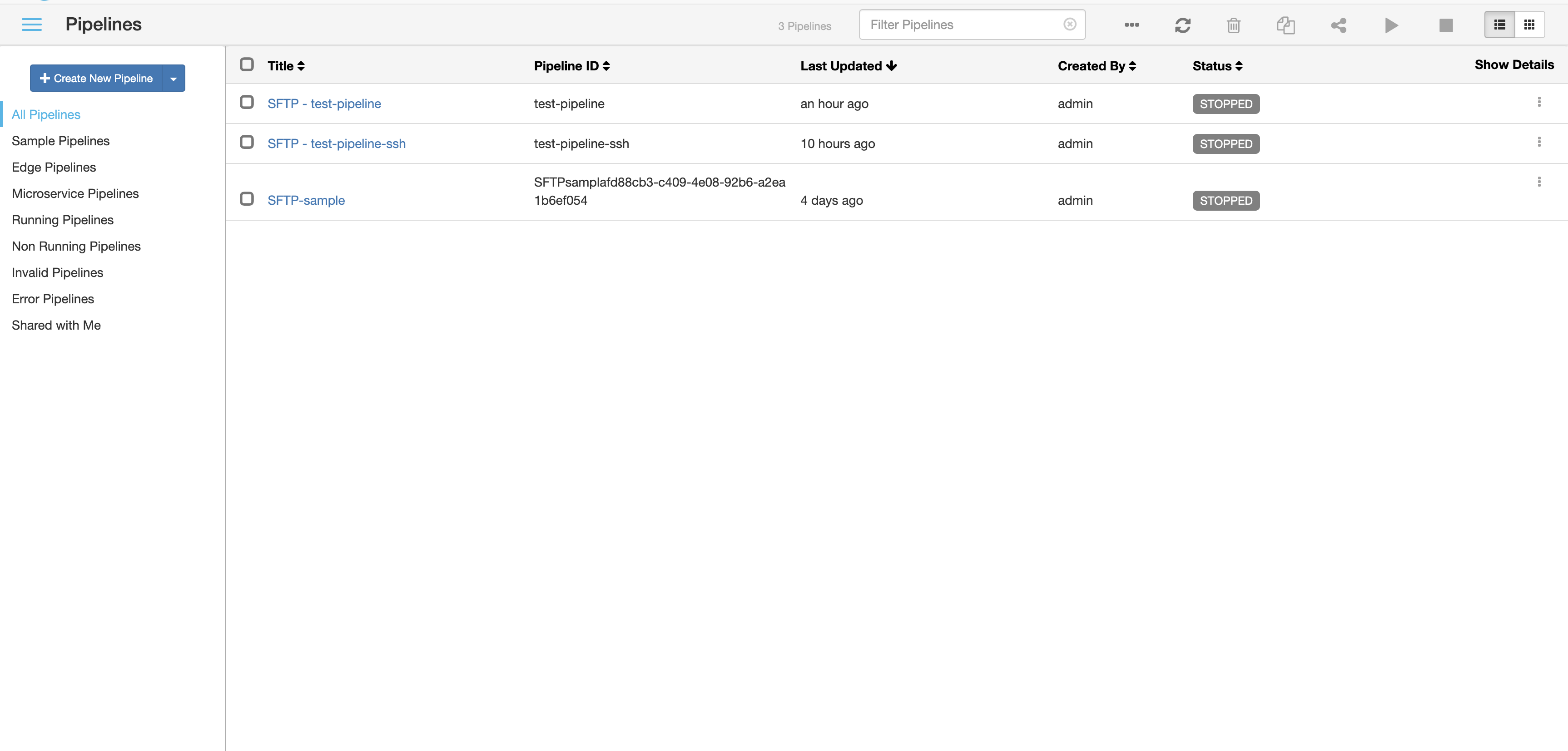

- Go to localhost:18630 and this page should show:

- The username should be admin and the password should be taken from this command:

kubectl get secrets -n agility agility-autoloader-secrets -o jsonpath="{.data.admin\.password}" | base64 -d

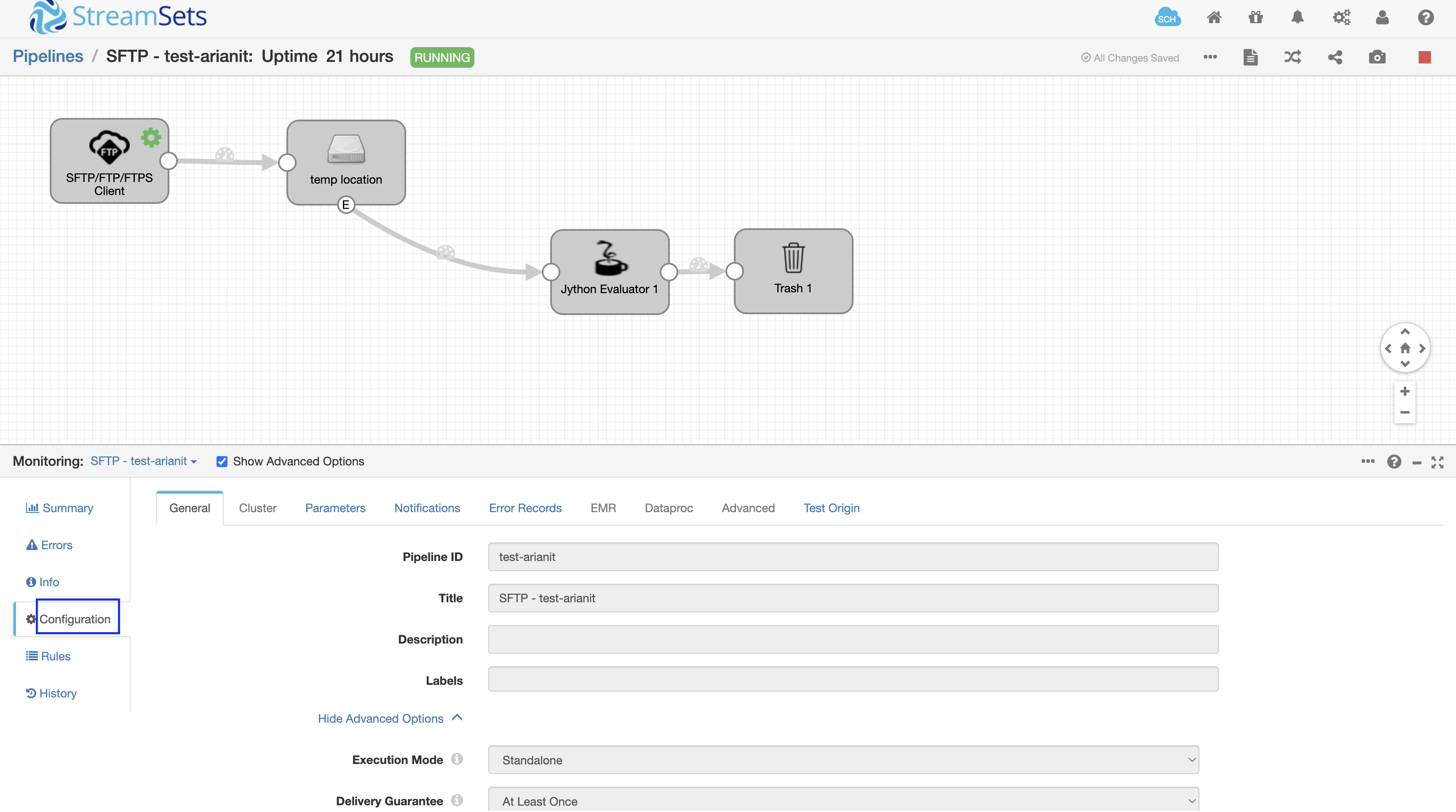

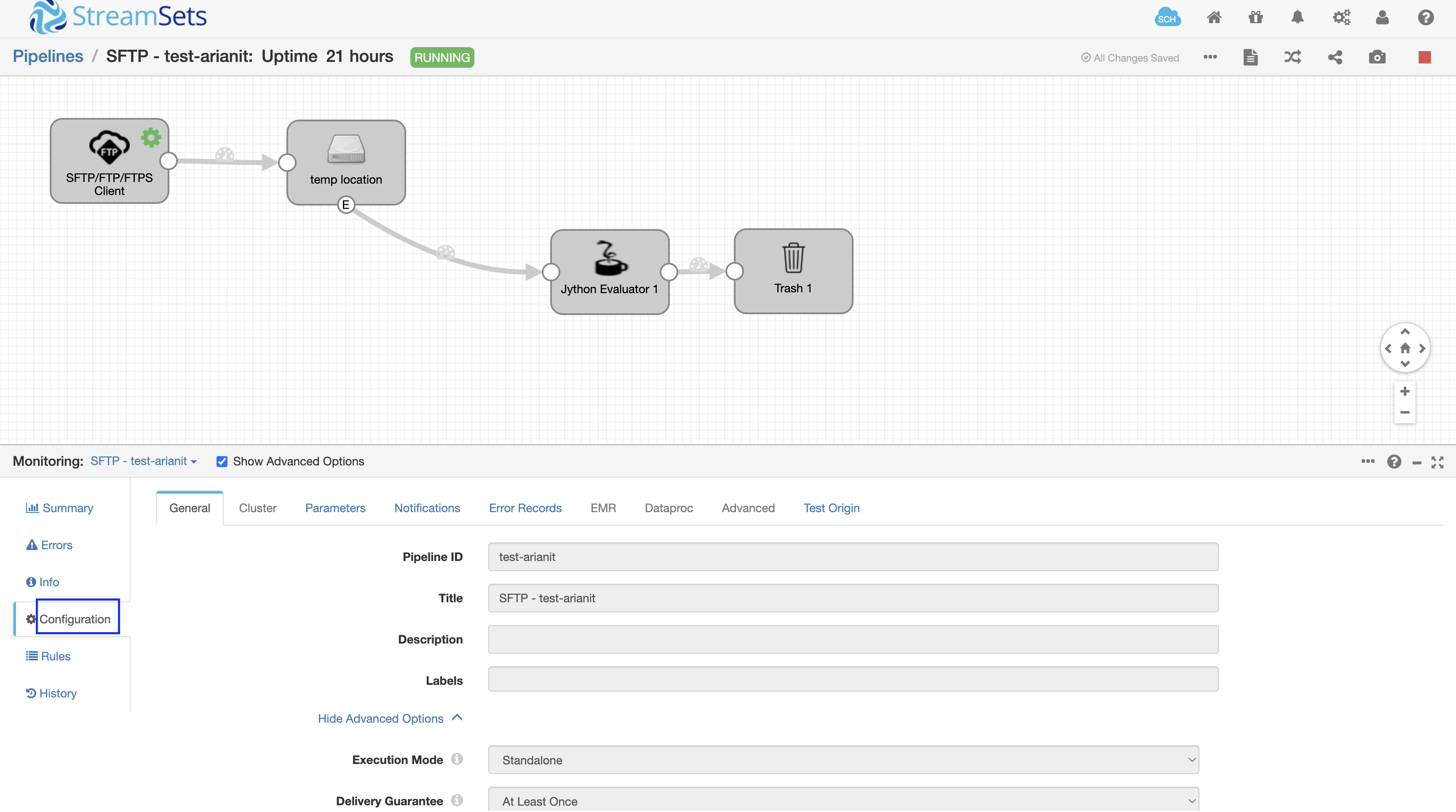

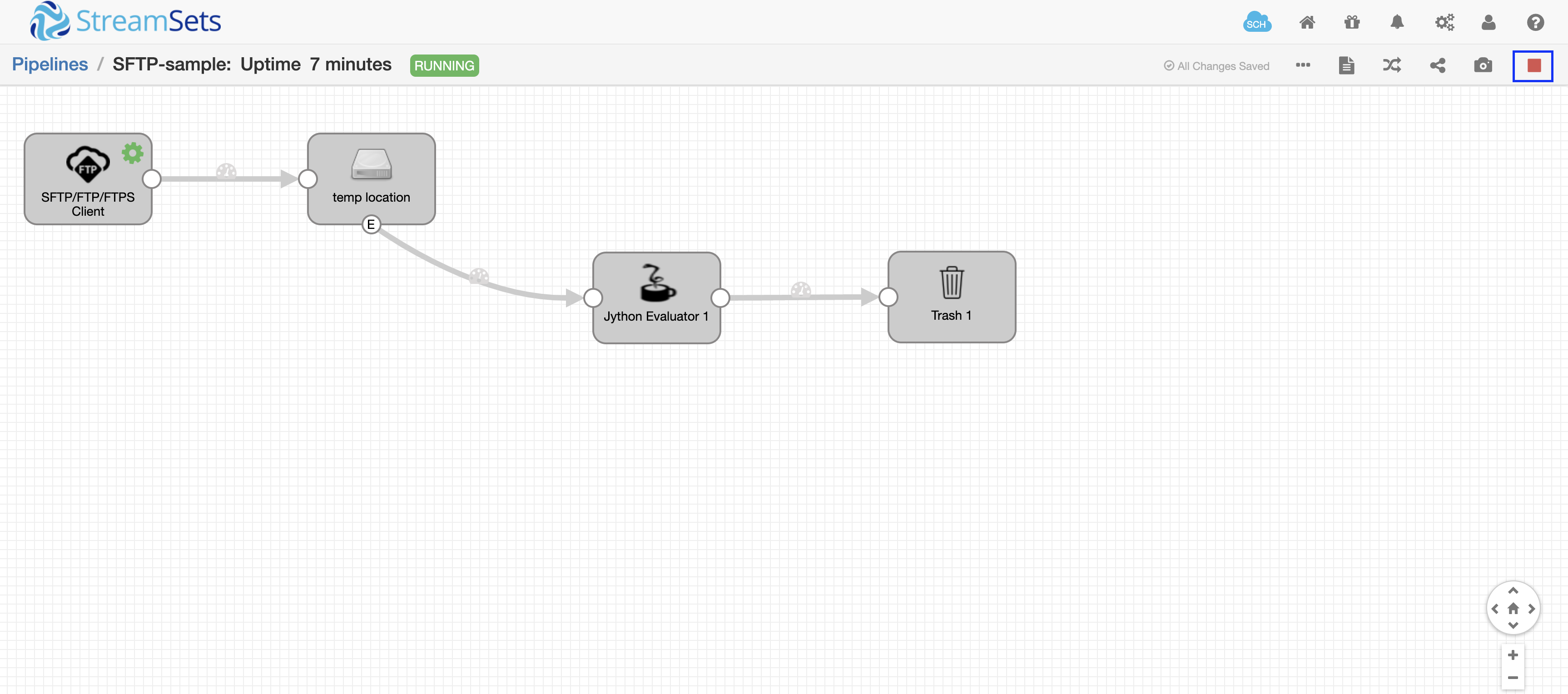

- After logging in, this page should show:

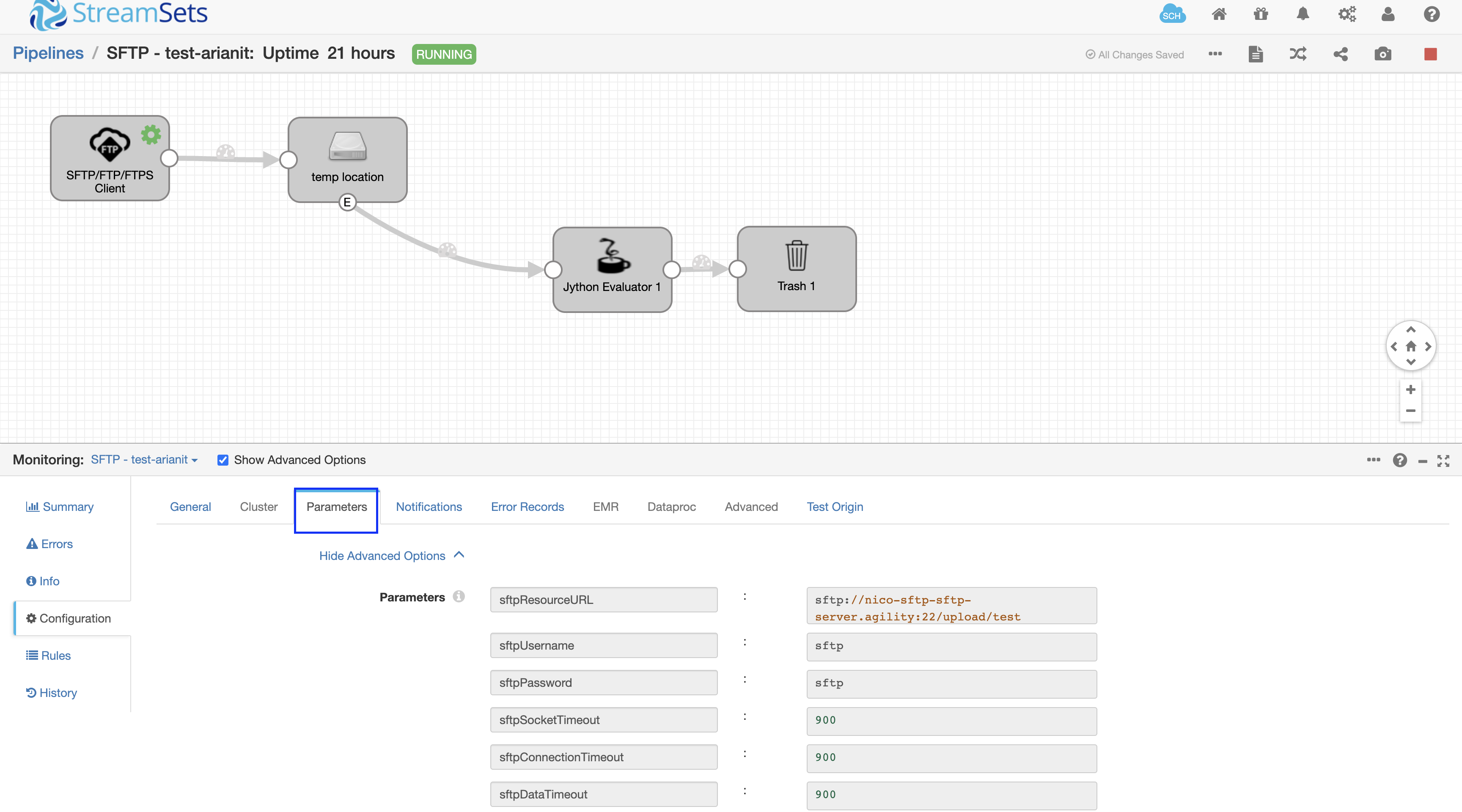

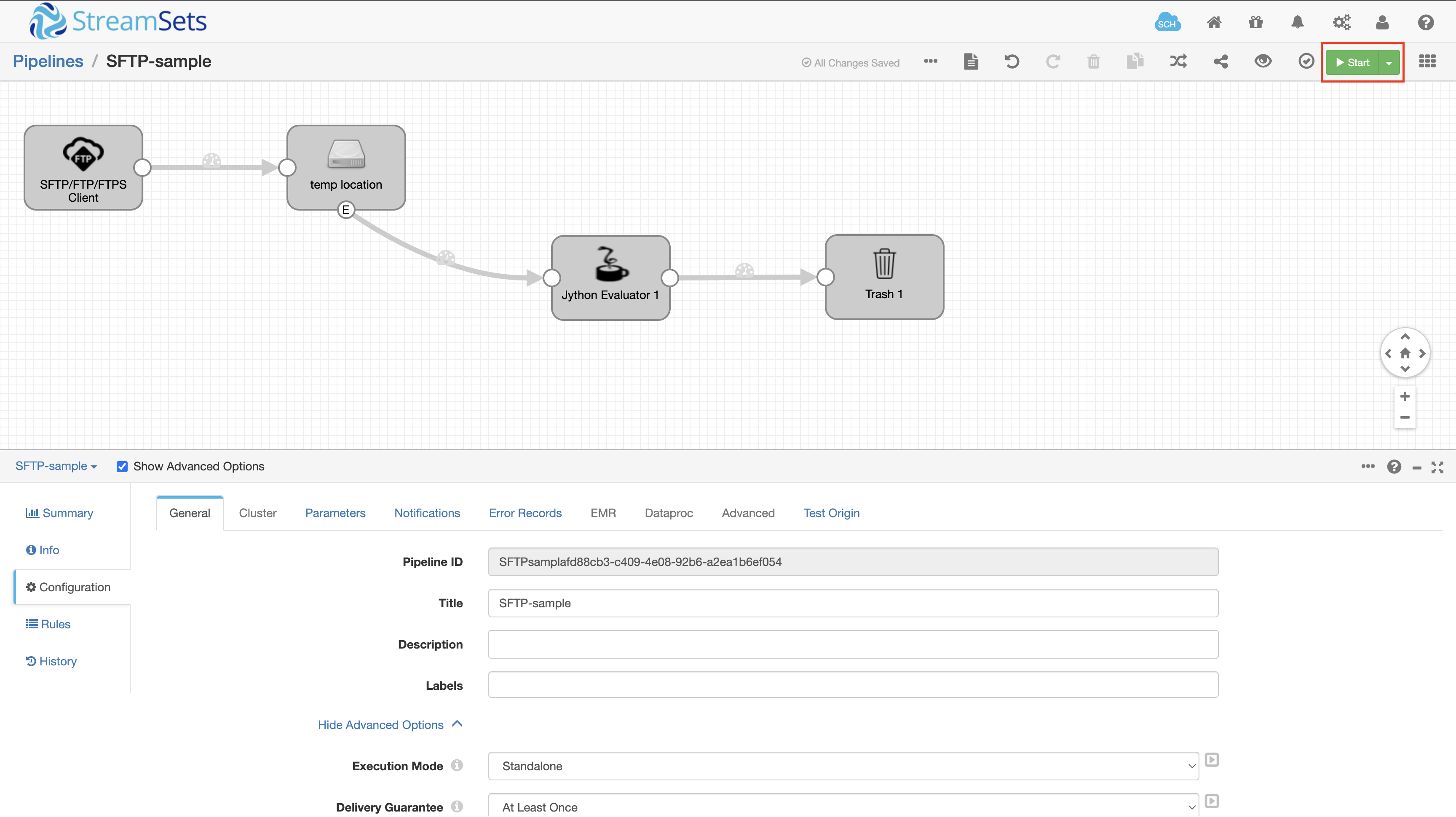

- Go to the pipeline you have created. The pipeline page should show. To start the pipeline, click the start button:

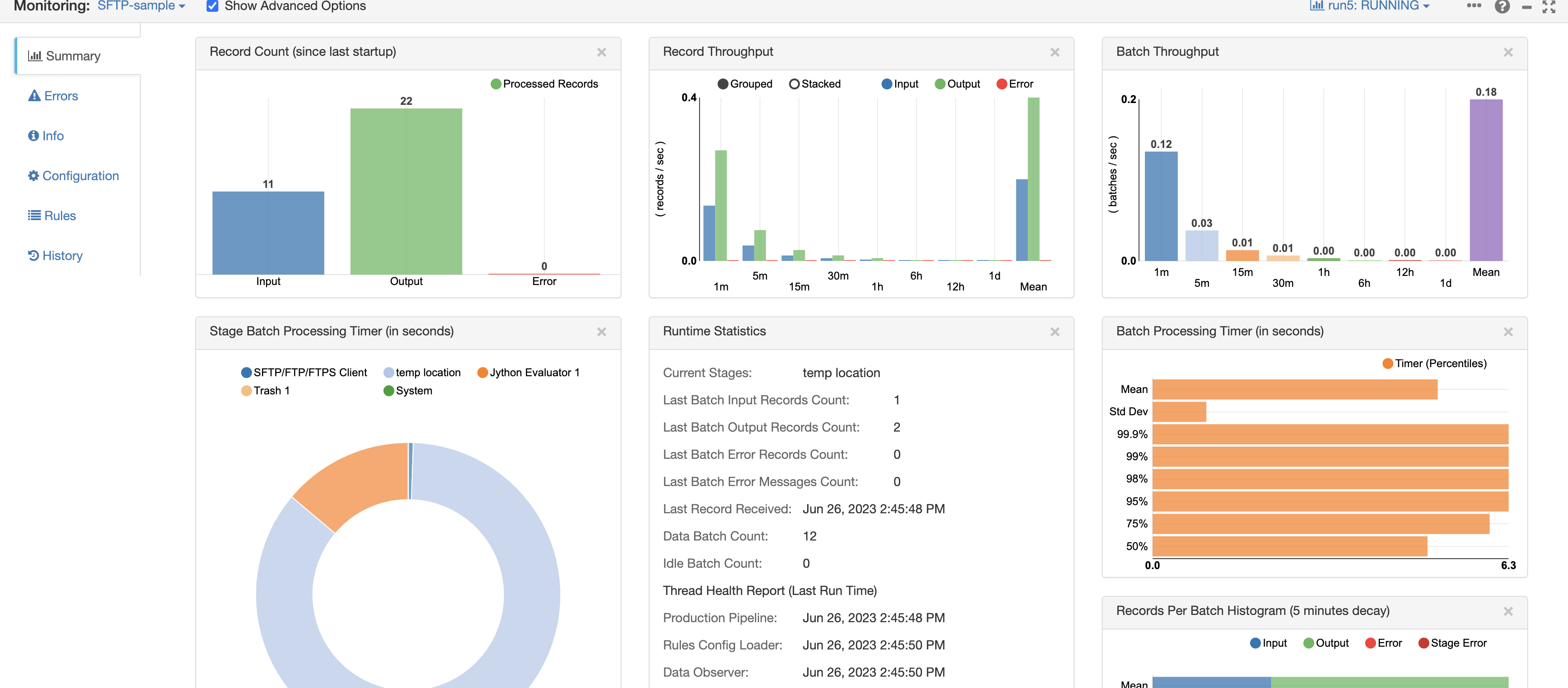

- While the pipeline is running, its statistics can be seen here:

- To check the logs while the pipeline is running, run this command:

kubectl logs -n agility pods/agility-autoloader-0

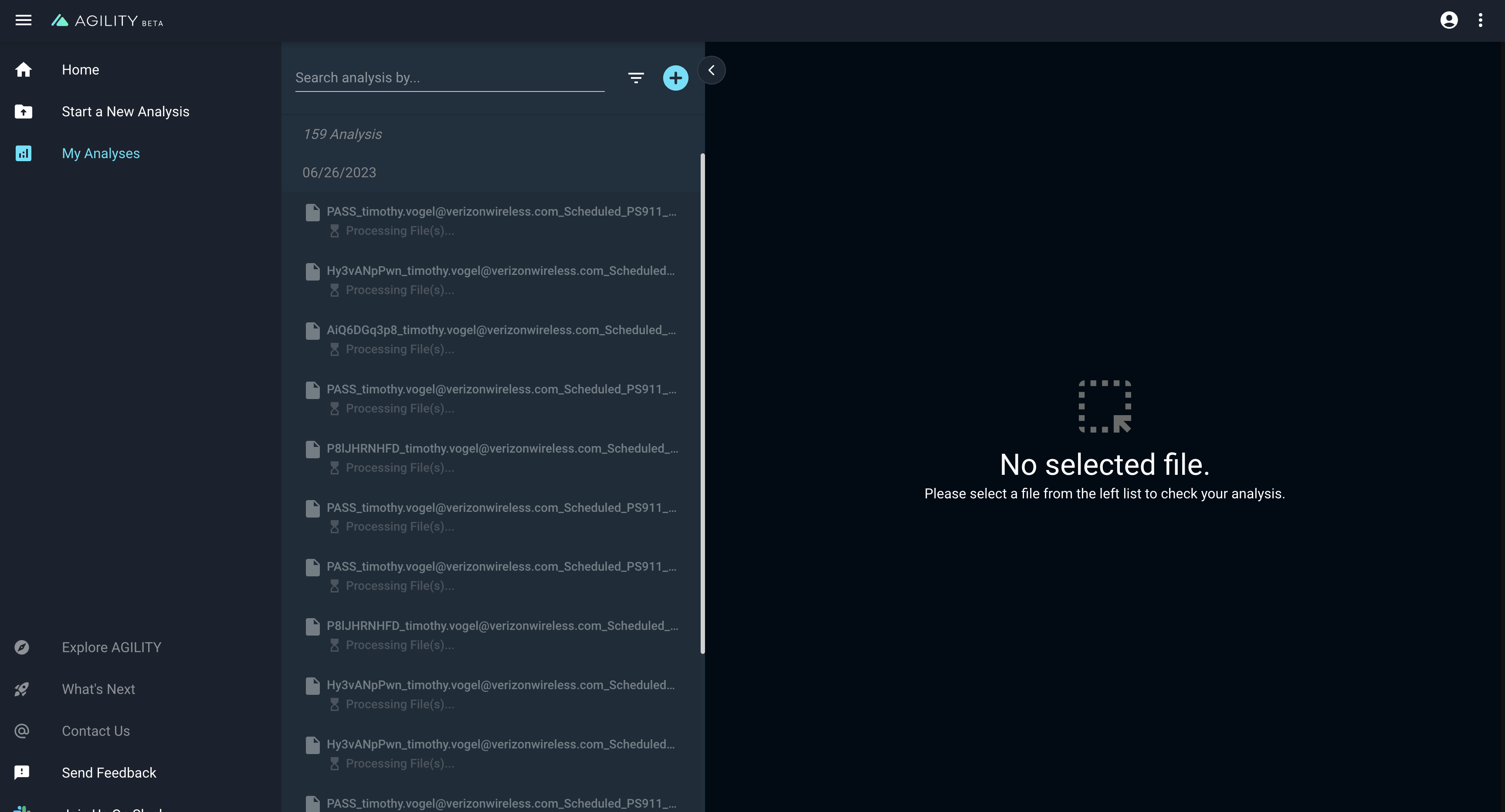

- Meanwhile, in the AGILITY UI, the analyses being created under your user are shown in the My Analyses tab:

- To stop the pipeline, click on the stop button:

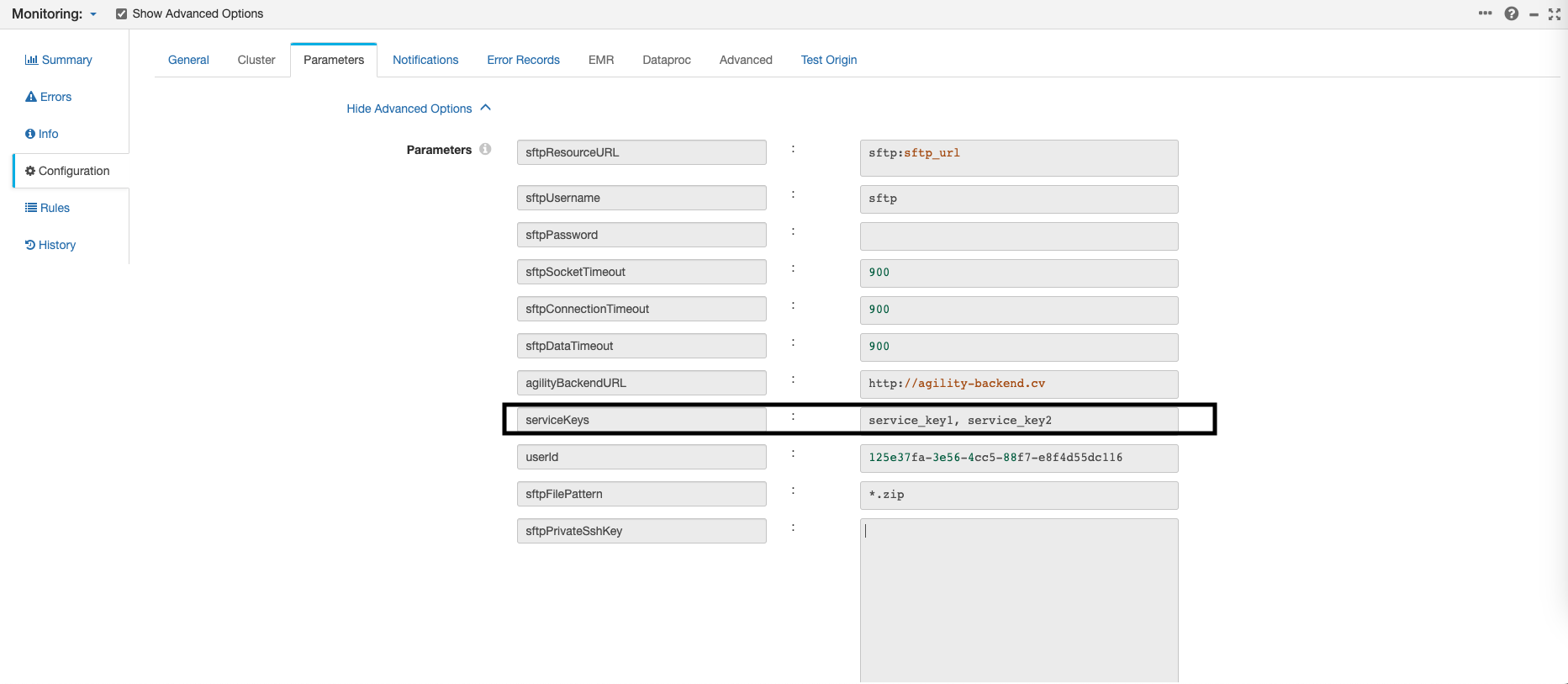

- To update the parameters of that pipeline, go to configuration, then parameters.
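The commands in the steps above hard-code the agility namespace, while the S3 example deploys to cv. A small sketch such as the following derives the port-forward and log commands from AGILITY_NS so they stay consistent with the namespace used at creation time. `autoloader_commands` is a hypothetical helper; it only prints the commands and does not run kubectl.

```shell
#!/bin/sh
# Namespace and pod name as used in the examples on this page.
AGILITY_NS="${AGILITY_NS:-agility}"
POD=agility-autoloader-0

# autoloader_commands NAMESPACE
# Hypothetical helper: prints the port-forward and logs commands for the
# given namespace so they can be reviewed and then copied into a terminal.
autoloader_commands() {
  ns=$1
  printf 'kubectl port-forward -n %s %s 18630:18630\n' "$ns" "$POD"
  printf 'kubectl logs -n %s pods/%s\n' "$ns" "$POD"
}

autoloader_commands "$AGILITY_NS"
```

For a deployment in another namespace, call it as `autoloader_commands cv` or export AGILITY_NS before running the script.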