
Install AGILITY Monitoring on Kubernetes

Prerequisites

Before installing AGILITY Monitoring, make sure you meet the following prerequisites:

- Admin Permissions. You should have Kubernetes administrative permissions to perform the installation.

- Helm. Make sure you have Helm version 3.7.1 or higher installed.

- B-Yond Registry Credentials. To install AGILITY, you will need the B-Yond registry credentials (username and password) provided by B-Yond customer support.

Please ensure that you have fulfilled all these prerequisites before moving on to the next step.
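The Helm version requirement can be checked from a script. The following is a minimal sketch, not part of the AGILITY tooling: the helper name version_ge and the placeholder version string are assumptions made for illustration, and the comparison relies on `sort -V` being available on your system.

```shell
#!/bin/sh
# Return success when version $1 is greater than or equal to version $2.
# Relies on `sort -V` (version sort) to order the two strings.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Placeholder value for illustration. On a real system the version would
# come from `helm version --template '{{.Version}}'`, with the leading "v" removed.
installed="3.12.0"

if version_ge "$installed" "3.7.1"; then
  echo "Helm $installed satisfies the 3.7.1 minimum"
else
  echo "Helm $installed is older than 3.7.1"
fi
```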

Helm Charts Download

Contact customer support to determine the version of AGILITY to deploy.

Create a working directory for the charts:

mkdir -p agility-monitoring-charts && cd agility-monitoring-charts

Pull the charts:

export AGILITY_MONITORING_VERSION=1.0.8

export HELM_EXPERIMENTAL_OCI=1

export OCI_USERNAME="my-registry-username"

export OCI_AUTH_TOKEN="my-registry-password"

export OCI_EMAIL="example@my-company.com"

rm -rf {agility-opentelemetry,agility-metrics,agility-logging,agility-observability}

helm registry login -u "${OCI_USERNAME}" -p "${OCI_AUTH_TOKEN}" iad.ocir.io

helm pull --untar --untardir ./ --version "${AGILITY_MONITORING_VERSION}" oci://iad.ocir.io/idcci80yilhd/agility/agility-monitoring/helm-charts/agility-opentelemetry

helm pull --untar --untardir ./ --version "${AGILITY_MONITORING_VERSION}" oci://iad.ocir.io/idcci80yilhd/agility/agility-monitoring/helm-charts/agility-metrics

helm pull --untar --untardir ./ --version "${AGILITY_MONITORING_VERSION}" oci://iad.ocir.io/idcci80yilhd/agility/agility-monitoring/helm-charts/agility-observability

helm pull --untar --untardir ./ --version "${AGILITY_MONITORING_VERSION}" oci://iad.ocir.io/idcci80yilhd/agility/agility-monitoring/helm-charts/agility-logging
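The four pull commands above differ only in the chart name, so they can be expressed as a loop. This is a sketch under stated assumptions: the function name pull_all_charts and the HELM_BIN override are hypothetical (HELM_BIN exists only so the loop can be dry-run with HELM_BIN=echo), while the registry path and chart names are copied from the commands above.

```shell
#!/bin/sh
# Registry path and chart names as used in the pull commands above.
REGISTRY_PATH="oci://iad.ocir.io/idcci80yilhd/agility/agility-monitoring/helm-charts"
CHARTS="agility-opentelemetry agility-metrics agility-observability agility-logging"

# Pull and unpack every chart at the version given as the first argument.
# HELM_BIN is a hypothetical override; it defaults to the real helm binary.
pull_all_charts() {
  version="$1"
  for chart in $CHARTS; do
    "${HELM_BIN:-helm}" pull --untar --untardir ./ --version "$version" \
      "${REGISTRY_PATH}/${chart}" || return 1
  done
}
```

After logging in to the registry, the function would be called as `pull_all_charts "${AGILITY_MONITORING_VERSION}"`. Running `HELM_BIN=echo pull_all_charts 1.0.8` prints the commands without executing them.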

Deploy agility-opentelemetry

The agility-opentelemetry deployment includes the following components:

- OpenTelemetry Collector: Collector for metrics and traces.

To deploy the agility-opentelemetry chart, follow these steps:

- Create the target namespace (assumed throughout this document to be monitoring):

kubectl create namespace monitoring

- Create the imagePullSecret to pull images from the B-Yond container registry:

kubectl --namespace monitoring create secret docker-registry byond-container-registry-credential \
  --docker-server=iad.ocir.io \
  --docker-username="${OCI_USERNAME}" \
  --docker-password="${OCI_AUTH_TOKEN}" \
  --docker-email="${OCI_EMAIL}"

- Create an override values file (options are available in the agility-opentelemetry chart):

cat <<EOF > agility-opentelemetry-values-overrides.yaml
opentelemetry-collector:
  imagePullSecrets:
    - name: byond-container-registry-credential
EOF

- Run the Helm command to deploy agility-opentelemetry:

helm -n monitoring upgrade --install --create-namespace agility-opentelemetry ./agility-opentelemetry --values agility-opentelemetry-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. The following is an example:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-opentelemetry
NAME                                     READY   STATUS    RESTARTS   AGE
agility-opentelemetry-867f969844-gmwnp   1/1     Running   0          10s
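The readiness condition in the last step (every Pod is Completed, or Running with all containers ready) can be checked mechanically instead of by eye. The following sketch is an illustration only: the function name all_pods_ready is hypothetical, and it assumes the default column layout of `kubectl get pods` (NAME, READY, STATUS as the first three columns).

```shell
#!/bin/sh
# Read `kubectl get pods` output on stdin. Succeed only if at least one pod is
# listed and every pod is either Completed, or Running with READY showing n/n.
all_pods_ready() {
  awk '
    NR == 1 { next }                 # skip the header row
    { n++ }                          # count pods
    $3 == "Completed" { next }       # finished pods are fine
    $3 == "Running" {
      split($2, r, "/")
      if (r[1] == r[2]) next         # all containers ready
    }
    { bad = 1 }                      # anything else is not ready yet
    END { exit (bad || n == 0) }
  '
}
```

A typical invocation would be `kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-opentelemetry | all_pods_ready && echo ready`. The same check applies to the other charts in this document by changing the label selector.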

Deploy agility-metrics

The agility-metrics deployment includes the following components:

- Prometheus: For metrics storage.

- Grafana: For visualization of metrics and traces.

- Alertmanager: For alert management.

To deploy the agility-metrics chart, follow these steps:

- Create the target namespace (assumed throughout this document to be monitoring). Skip this step if the namespace already exists:

kubectl create namespace monitoring

- Create the imagePullSecret to pull images. Skip this step if the secret already exists in the namespace:

kubectl --namespace monitoring create secret docker-registry byond-container-registry-credential \
  --docker-server=iad.ocir.io \
  --docker-username="${OCI_USERNAME}" \
  --docker-password="${OCI_AUTH_TOKEN}" \
  --docker-email="${OCI_EMAIL}"

- Create the Grafana admin secret (make sure you back up these values):

kubectl --namespace monitoring create secret generic agility-metrics \
  --from-literal=grafana-admin-user=admin \
  --from-literal=grafana-admin-password=changeit

- Create an override values file (options are available in the agility-metrics chart).

NOTES

- Adjust volume sizes as needed. Default values are recommended for standard usage.

- Prometheus retention is 10d and the retention size is 30 GiB.

- Grafana persistence is enabled with a 1 GiB volume.

cat <<EOF > agility-metrics-values-overrides.yaml
kube-prometheus-stack:
  prometheus:
    prometheusSpec:
      retentionSize: 30GiB
      retention: 10d
  grafana:
    grafana.ini:
      smtp:
        enabled: false
    smtp:
      existingSecret: ""
    persistence:
      enabled: true
      type: statefulset
      size: 1Gi
EOF

- Run the Helm command to deploy agility-metrics:

helm -n monitoring upgrade --install --create-namespace agility-metrics ./agility-metrics --values agility-metrics-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. The following is an example:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-metrics
NAME                                                   READY   STATUS    RESTARTS   AGE
agility-metrics-grafana-0                              2/2     Running   0          40s
agility-metrics-kube-prome-operator-576865799f-z6c68   1/1     Running   0          38s
agility-metrics-kube-state-metrics-7c6797776-6bfg2     1/1     Running   0          2m
agility-metrics-prometheus-node-exporter-2dvx9         1/1     Running   0          40s
agility-metrics-prometheus-node-exporter-9plfd         1/1     Running   0          40s
agility-metrics-prometheus-node-exporter-hw4dd         1/1     Running   0          40s
agility-metrics-prometheus-node-exporter-n6hms         1/1     Running   0          40s
agility-metrics-prometheus-node-exporter-tr67p         1/1     Running   0          40s
agility-metrics-prometheus-node-exporter-xtf9m         1/1     Running   0          40s
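The Grafana admin secret in the steps above uses the placeholder password changeit. One way to avoid a guessable value is to generate a random one before creating the secret. This is a sketch, assuming /dev/urandom and `head -c` are available; the function name random_password is hypothetical.

```shell
#!/bin/sh
# Print a random password of N alphanumeric characters (default 24).
# Reads from /dev/urandom and keeps only A-Z, a-z and 0-9.
random_password() {
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "${1:-24}"
}
```

The result can be stored in a variable, for example `GRAFANA_ADMIN_PASSWORD="$(random_password 24)"`, and passed to the secret with `--from-literal=grafana-admin-password="${GRAFANA_ADMIN_PASSWORD}"`. Record the value somewhere safe, since it is needed to log in to Grafana.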

Alerting (Optional)

- Add the following configuration for Grafana alerting by writing this override file:

cat <<EOF > agility-metrics-values-overrides.yaml
kube-prometheus-stack:
  prometheus:
    prometheusSpec:
      retentionSize: 30GiB
      retention: 10d
  grafana:
    grafana.ini:
      smtp:
        enabled: false # Grafana only sends email through this server when this is true
        host: <yoursmtpserver>
        from_address: <yournoreplyaddress>
        skip_verify: false
        from_name: Grafana
    smtp:
      existingSecret: grafana-alerting-smtp # -> If you don't have a secret for your smtp server, please set it as ""
    persistence:
      enabled: true
      type: statefulset
      size: 1Gi
EOF

- Create the secret for the SMTP server (optional):

kubectl -n monitoring create secret generic grafana-alerting-smtp --from-literal=user=user --from-literal=password=yourpassword
secret/grafana-alerting-smtp created

- Run the Helm command to deploy agility-metrics and apply the new configuration:

helm -n monitoring upgrade --install --create-namespace agility-metrics ./agility-metrics --values agility-metrics-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. The following is an example:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-metrics
NAME                        READY   STATUS    RESTARTS   AGE
agility-metrics-grafana-0   2/2     Running   0          40s

Now, we need to create a contact point and notification policy to complete the installation. Due to the implementation of this type of object, we recommend to do it from the Grafana UI.

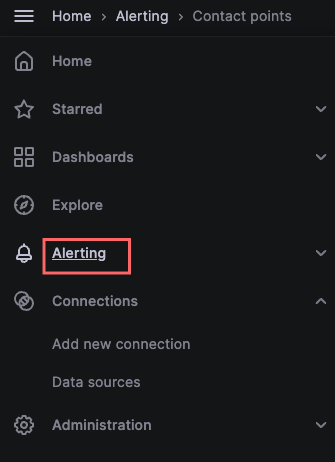

To create a contact point we go to the following menu.

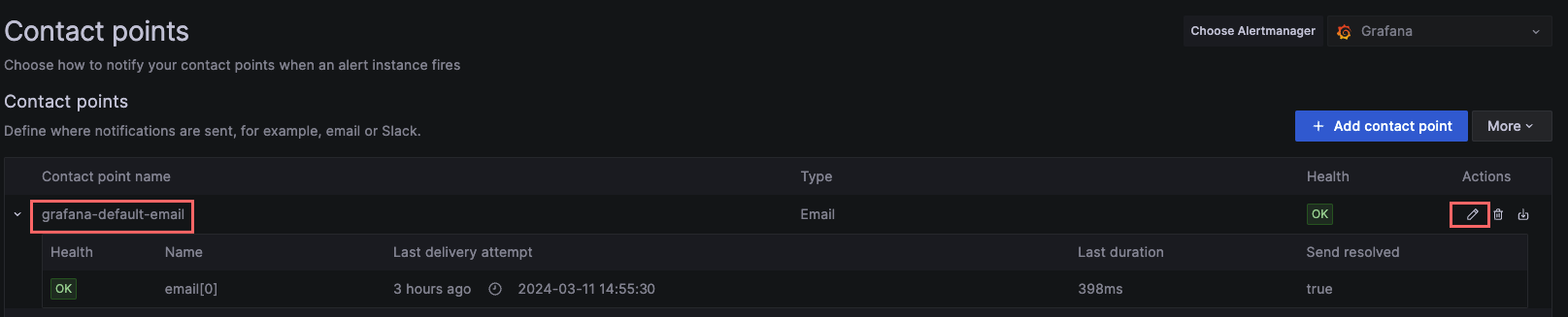

For this example we’re going to use the default contact point that comes with Grafana. So we edit the contact point and add an email address where we’ll like to receive the alerts.

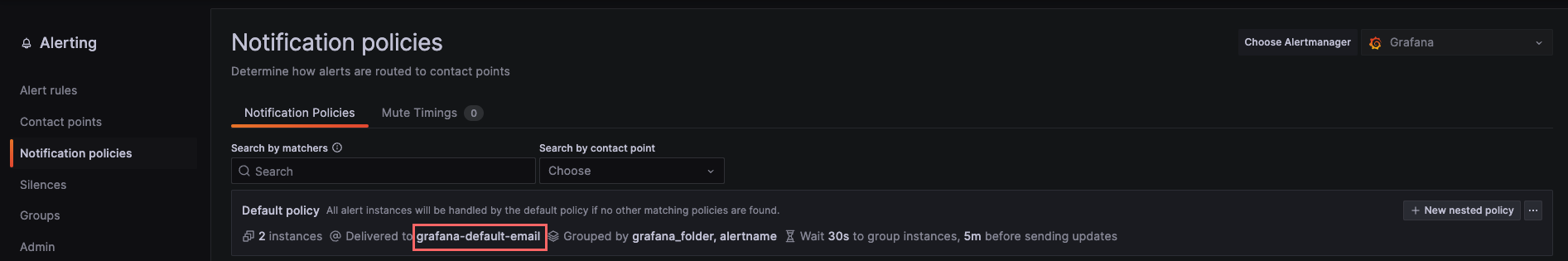

About notification policies, we already have one created an pointing to the default contact point. So we don’t need to create a new one.

From now on, once the alert triggers, we should start receiving emails into the address we configured.

S3 bucket for alerting (Optional)

- Add the following configuration for Thanos and Prometheus by writing this override file:

cat <<EOF > agility-metrics-values-overrides.yaml
# Thanos services deployment.
thanos:
  enabled: true
kube-prometheus-stack:
  prometheus:
    prometheusSpec:
      # For migration purposes, set the disableCompaction to true. Once completed turn it back to false.
      # disableCompaction: true
      # Configuration for Thanos sidecar
      thanos:
        image: gcr.io/byond-infinity-platform/agility-monitoring/thanos:0.33.0-debian-11-r1
        objectStorageConfig:
          # blob storage configuration to upload metrics
          existingSecret:
            key: objstore.yml
            name: thanos-objstore-secret
        # For migration purposes. Uncomment to move all chunks from Prometheus to Thanos Bucket. Once the data is there comment again.
        # additionalArgs:
        #   - name: "shipper.upload-compacted"
    thanosService:
      enabled: true
    thanosServiceMonitor:
      enabled: true
EOF

- Create the secret template with the bucket connection details used by Thanos and Prometheus:

cat <<EOF > thanos-objstore-secret.yaml
config:
  access_key: <access-key>
  bucket: <bucket-name>
  endpoint: <endpoint>
  region: <region>
  secret_key: <secret-access_key>
type: S3
prefix: "<prefix>"
EOF

- Create the secret from the previous template:

kubectl -n monitoring create secret generic thanos-objstore-secret --from-file=objstore.yml=thanos-objstore-secret.yaml

- Run the Helm command to deploy agility-metrics and apply the new configuration:

helm -n monitoring upgrade --install --create-namespace agility-metrics ./agility-metrics --values agility-metrics-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. You can also check that the new Thanos services are running:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-metrics
NAME                                                    READY   STATUS    RESTARTS        AGE
agility-metrics-thanos-query-586cbfb95-gxflt            1/1     Running   0               26d
agility-metrics-thanos-query-frontend-55445fcf4-v7l89   1/1     Running   0               26d
agility-metrics-thanos-storegateway-0                   1/1     Running   0               73m
prometheus-agility-metrics-kube-prome-prometheus-0      3/3     Running   2 (3d19h ago)   11d

The Prometheus Pod also runs an additional container (thanos-sidecar).
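The secret template in the steps above contains placeholders such as <access-key> and <bucket-name>. If one is left unreplaced, the secret is still created but Thanos cannot reach the bucket. The sketch below flags leftover placeholders before the secret is created; the function name has_placeholders is hypothetical, and the pattern assumes placeholders are written in angle brackets as in this document.

```shell
#!/bin/sh
# Succeed if the given file still contains an unreplaced <placeholder> token.
has_placeholders() {
  grep -Eq '<[A-Za-z][A-Za-z_-]*>' "$1"
}
```

For example, `has_placeholders thanos-objstore-secret.yaml && echo "fill in the template first"` can be run before the `kubectl create secret` step.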

Deploy agility-logging

The agility-logging deployment includes the following components:

- Grafana Loki: For log storage.

- Promtail: For log collection.

To deploy the agility-logging chart, follow these steps:

- Create the target namespace (assumed throughout this document to be monitoring). Skip this step if the namespace already exists:

kubectl create namespace monitoring

- Create the imagePullSecret to pull images. Skip this step if the secret already exists in the namespace:

kubectl --namespace monitoring create secret docker-registry byond-container-registry-credential \
  --docker-server=iad.ocir.io \
  --docker-username="${OCI_USERNAME}" \
  --docker-password="${OCI_AUTH_TOKEN}" \
  --docker-email="${OCI_EMAIL}"

- Create an override values file (options are available in the agility-logging chart).

NOTES

- Default values are recommended for standard usage.

- Loki retention is 2 weeks.

cat <<EOF > agility-logging-values-overrides.yaml
# Configuration for the loki dependency
loki:
  enabled: true
  loki:
    image:
      tag: 2.9.4
    storage:
      type: 'filesystem'
# Configuration for promtail dependency
promtail:
  enabled: true
  image:
    tag: 2.9.3
EOF

- Run the Helm command to deploy agility-logging:

helm -n monitoring upgrade --install --create-namespace agility-logging ./agility-logging --values agility-logging-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. The following is an example:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-logging
NAME                                       READY   STATUS    RESTARTS   AGE
agility-logging-0                          1/1     Running   0          11d
agility-logging-gateway-85d746679d-hhzht   1/1     Running   0          11d
agility-logging-promtail-fgbfd             1/1     Running   0          11d
agility-logging-promtail-wbsmv             1/1     Running   0          11d
agility-logging-promtail-zr5bx             1/1     Running   0          11d

S3 bucket for logging (Optional)

- Add the following configuration for Loki by writing this override file. The heredoc delimiter is quoted ('EOF') so that the shell leaves ${AWS_ACCESS_KEY_ID} and ${AWS_SECRET_ACCESS_KEY} untouched; Loki expands them at startup through -config.expand-env=true:

cat <<'EOF' > agility-logging-values-overrides.yaml
loki:
  loki:
    storage:
      bucketNames:
        chunks: <bucket-name>
        ruler: <bucket-name>
        admin: <bucket-name>
      type: s3
      s3:
        endpoint: <bucket-endpoint>
        http_config:
          insecure_skip_verify: true
        insecure: false
        region: <region>
        s3: <bucket-name>
        s3forcepathstyle: false
        accessKeyId: ${AWS_ACCESS_KEY_ID}
        secretAccessKey: ${AWS_SECRET_ACCESS_KEY}
  singleBinary:
    replicas: 1
    extraArgs:
      - '-config.expand-env=true'
    extraEnv:
      - name: AWS_ACCESS_KEY_ID
        valueFrom:
          secretKeyRef:
            name: s3-bucket
            key: access_key
      - name: AWS_SECRET_ACCESS_KEY
        valueFrom:
          secretKeyRef:
            name: s3-bucket
            key: secret_key
EOF

- Create the secret with the S3 credentials:

kubectl -n monitoring create secret generic s3-bucket --from-literal=access_key=<access_key> --from-literal=secret_key=<secret_key>
secret/s3-bucket created

- Run the Helm command to deploy agility-logging and apply the new configuration:

helm -n monitoring upgrade --install --create-namespace agility-logging ./agility-logging --values agility-logging-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. The following is an example:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-logging
NAME                                       READY   STATUS    RESTARTS   AGE
agility-logging-0                          1/1     Running   0          11d
agility-logging-gateway-85d746679d-hhzht   1/1     Running   0          11d
agility-logging-promtail-fgbfd             1/1     Running   0          11d
agility-logging-promtail-wbsmv             1/1     Running   0          11d
agility-logging-promtail-zr5bx             1/1     Running   0          11d
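The S3 override file relies on Loki expanding ${AWS_ACCESS_KEY_ID} and ${AWS_SECRET_ACCESS_KEY} at startup (-config.expand-env=true), so those references need to reach the file literally. Whether they do depends on how the heredoc delimiter is quoted. The following sketch shows the difference; the variable value is illustrative only.

```shell
#!/bin/sh
AWS_ACCESS_KEY_ID="expanded-by-shell"   # illustrative value only

# Unquoted delimiter: the shell substitutes the variable while writing the text.
unquoted=$(cat <<EOF
accessKeyId: ${AWS_ACCESS_KEY_ID}
EOF
)

# Quoted delimiter: the text is written literally, leaving ${...} in place.
quoted=$(cat <<'EOF'
accessKeyId: ${AWS_ACCESS_KEY_ID}
EOF
)

echo "$unquoted"
echo "$quoted"
```

With an unquoted delimiter and the variable unset in the current shell, the generated file would contain empty credentials, so the quoted form is the safer choice when the expansion is meant to happen inside the Pod.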

Deploy agility-observability

The agility-observability deployment includes the following components:

- Grafana Tempo: For trace storage.

To deploy the agility-observability chart, follow these steps:

- Create the target namespace (assumed throughout this document to be monitoring). Skip this step if the namespace already exists:

kubectl create namespace monitoring

- Create the imagePullSecret to pull images. Skip this step if the secret already exists in the namespace:

kubectl --namespace monitoring create secret docker-registry byond-container-registry-credential \
  --docker-server=iad.ocir.io \
  --docker-username="${OCI_USERNAME}" \
  --docker-password="${OCI_AUTH_TOKEN}" \
  --docker-email="${OCI_EMAIL}"

- Create an override values file (options are available in the agility-observability chart).

NOTES

- Default values are recommended for standard usage.

- Tempo retention and the maximum search window are both 2 weeks (336h).

cat <<EOF > agility-observability-values-overrides.yaml
tempo:
  tempo:
    pullSecrets:
      - byond-container-registry-credential
    retention: "336h"
    queryFrontend:
      search:
        max_duration: 336h
EOF

- Run the Helm command to deploy agility-observability:

helm -n monitoring upgrade --install --create-namespace agility-observability ./agility-observability --values agility-observability-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. The following is an example:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-observability
NAME                            READY   STATUS    RESTARTS   AGE
agility-observability-tempo-0   1/1     Running   0          13d
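The Tempo override expresses the 2-week retention in hours (336h). If you choose a different retention period, a small helper can do the conversion. This is an illustrative sketch; the function name days_to_hours is hypothetical.

```shell
#!/bin/sh
# Convert a number of days into the hour-based duration string used in the
# Tempo override (for example 14 days -> 336h).
days_to_hours() {
  echo "$(( $1 * 24 ))h"
}

days_to_hours 14
```

Use the same value for retention and for queryFrontend.search.max_duration if searches should cover the full retention window.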

S3 bucket for observability (Optional)

- Add the following configuration for Tempo by writing this override file. The heredoc delimiter is quoted ('EOF') so that the shell leaves ${AWS_ACCESS_KEY_ID} and ${AWS_SECRET_ACCESS_KEY} untouched; Tempo expands them at startup through config.expand-env:

cat <<'EOF' > agility-observability-values-overrides.yaml
tempo:
  tempo:
    storage:
      trace:
        pool:
          max_workers: 100
          queue_depth: 10000
        backend: s3
        s3:
          access_key: ${AWS_ACCESS_KEY_ID}
          secret_key: ${AWS_SECRET_ACCESS_KEY}
          bucket: <bucket-name>
          endpoint: <bucket-endpoint>
          region: <region>
          prefix: <bucket-prefix>
    extraArgs: { config.expand-env=true }
    extraEnv:
      - name: AWS_ACCESS_KEY_ID
        valueFrom:
          secretKeyRef:
            name: s3-bucket
            key: access_key
      - name: AWS_SECRET_ACCESS_KEY
        valueFrom:
          secretKeyRef:
            name: s3-bucket
            key: secret_key
EOF

- Create the secret with the S3 credentials. Skip this step if the s3-bucket secret already exists in the namespace:

kubectl -n monitoring create secret generic s3-bucket --from-literal=access_key=<access_key> --from-literal=secret_key=<secret_key>
secret/s3-bucket created

- Run the Helm command to deploy agility-observability and apply the new configuration:

helm -n monitoring upgrade --install --create-namespace agility-observability ./agility-observability --values agility-observability-values-overrides.yaml

- Wait until all Pods are in Running or Completed state and all Running Pods show all expected containers as ready under the READY column. The following is an example:

kubectl -n monitoring get pods -l app.kubernetes.io/instance=agility-observability
NAME                            READY   STATUS    RESTARTS   AGE
agility-observability-tempo-0   1/1     Running   0          13d

Test & Usage

- Once everything is deployed, you can connect to Grafana by using port-forwarding:

export HTTP_SERVICE_PORT=$(kubectl get --namespace monitoring -o jsonpath="{.spec.ports[?(@.name=='http-web')].port}" services agility-metrics-grafana)
kubectl port-forward --namespace monitoring svc/agility-metrics-grafana 3000:${HTTP_SERVICE_PORT}

- Access AGILITY in your browser at http://localhost:3000/metrics/

Log in with the user and password stored in the agility-metrics secret that was created during the agility-metrics deployment:

username: your-user

password: your-password

To stop the port-forwarding process, press Ctrl+C in the terminal where you executed the command.
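If the Grafana credentials are no longer at hand, they can be read back from the agility-metrics secret, where Kubernetes stores them base64-encoded. The sketch below assumes the key names used when the secret was created in this document (grafana-admin-user and grafana-admin-password); the function name secret_field and the KUBECTL_BIN override are hypothetical.

```shell
#!/bin/sh
# Print one decoded field of a Kubernetes secret.
# Arguments: namespace, secret name, key inside the secret.
# KUBECTL_BIN is a hypothetical override; it defaults to the real kubectl binary.
secret_field() {
  "${KUBECTL_BIN:-kubectl}" --namespace "$1" get secret "$2" \
    -o "jsonpath={.data.$3}" | base64 --decode
}
```

For example, `secret_field monitoring agility-metrics grafana-admin-user` prints the user name, and the same call with grafana-admin-password prints the password.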

Uninstall

Follow these steps to uninstall the AGILITY Monitoring deployment and its associated resources:

- Delete the Helm releases in the namespace:

helm -n monitoring delete agility-observability --wait
helm -n monitoring delete agility-logging --wait
helm -n monitoring delete agility-metrics --wait
helm -n monitoring delete agility-opentelemetry --wait

- Remove the namespace. This also deletes the secrets and persistent volume claims created in it:

kubectl delete namespace monitoring

Appendix A: Prometheus rules

By default, the agility-metrics chart ships the following Prometheus rules:

agility-metrics/resources/prometheus-rules
├── basic-linux.yaml
├── kubernetes.yaml
└── postgresql.yaml

Disabling rules

These rules can be turned off in the override file used during installation. As an example, suppose you want to disable the basic-linux rules:

- This is the rule to remove:

kubectl get prometheusrules -n monitoring
NAME                          AGE
agility-metrics-basic-linux   5d22h

- To disable it, add the following lines to your overrides values file, agility-metrics-values-overrides.yaml:

# Enabled prometheus rules
extraPrometheusRules:
  basic-linux: false

- Run the Helm command to deploy agility-metrics:

helm -n monitoring upgrade --install --create-namespace agility-metrics ./agility-metrics --values agility-metrics-values-overrides.yaml

- Wait until the installation is complete and then check the object again. It should no longer exist:

kubectl get prometheusrules/agility-metrics-basic-linux -n monitoring
Error from server (NotFound): prometheusrules.monitoring.coreos.com "agility-metrics-basic-linux" not found

Adding more prometheus rules

To add more rules for this environment, follow these instructions:

- Go to agility-metrics/resources/prometheus-rules and add a new YAML file containing the rule, following the same format as the existing files:

agility-metrics/resources/prometheus-rules
├── basic-linux.yaml
├── kubernetes.yaml
├── postgresql.yaml
└── new-rule.yaml

- Add the following lines to your overrides values file, agility-metrics-values-overrides.yaml. It is important to use the same name as the file, without the .yaml extension:

# Enabled prometheus rules
extraPrometheusRules:
  new-rule: true

- Run the Helm command to deploy agility-metrics:

helm -n monitoring upgrade --install --create-namespace agility-metrics ./agility-metrics --values agility-metrics-values-overrides.yaml

- Wait until the installation is complete and then check the object again:

kubectl get prometheusrules -n monitoring
NAME                       AGE
agility-metrics-new-rule   10s
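The key under extraPrometheusRules has to match the rule file name without its .yaml extension. A small helper can derive the key from the file path so the two never drift apart. This is an illustrative sketch; the function name rule_key is hypothetical.

```shell
#!/bin/sh
# Derive the extraPrometheusRules key from a rule file path by dropping
# the directory and the .yaml extension.
rule_key() {
  basename "$1" .yaml
}

rule_key agility-metrics/resources/prometheus-rules/new-rule.yaml
```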

Appendix B: Dashboards

By default, the agility-metrics chart ships the following dashboards:

agility-metrics/resources/dashboards
└── common
    ├── agility-data-pipeline-observability.json
    └── monitoring-observability.json

Adding more dashboards

To add more dashboards for this environment, follow these instructions:

- Go to agility-metrics/resources/dashboards and add your new dashboard as a JSON file:

agility-metrics/resources/dashboards
└── common
    ├── agility-data-pipeline-observability.json
    ├── monitoring-observability.json
    └── new-dashboard.json

- Run the Helm command to deploy agility-metrics:

helm -n monitoring upgrade --install --create-namespace agility-metrics ./agility-metrics --values agility-metrics-values-overrides.yaml

- The new dashboard should now exist in the cluster as a ConfigMap:

kubectl get configmap -n monitoring
NAME                            DATA   AGE
agility-metrics-new-dashboard   1      14s

Appendix C: PagerDuty

This functionality is provided by the prometheus-pagerduty-exporter https://wasfree.github.io/prometheus-pagerduty-exporter. This sub-application, called agility-pagerduty, connects Prometheus and PagerDuty so that PagerDuty metrics are stored in Prometheus. You can then explore the PagerDuty metrics and build dashboards from them.

To enable this component, proceed as follows.

- Create the PagerDuty API token secret:

kubectl --namespace monitoring create secret generic agility-pagerduty \
  --from-literal=pagerduty_authtoken=<YOUR_PAGERDUTY_API_TOKEN>

- Open your overrides file, agility-metrics-values-overrides.yaml, and add the following lines:

prometheus-pagerduty-exporter:
  enabled: true
  config:
    authtokenSecret:
      name: agility-pagerduty
      key: pagerduty_authtoken

- Run the Helm command to deploy agility-metrics:

helm -n monitoring upgrade --install --create-namespace agility-metrics ./agility-metrics --values agility-metrics-values-overrides.yaml

- Wait until the installation is complete and then check that the exporter Pod is running:

kubectl get pods -n monitoring
NAME                                                              READY   STATUS    RESTARTS   AGE
agility-pagerduty-prometheus-pagerduty-exporter-888d9f998-w787d   1/1     Running   0          10s